Are you sure that what you just read, saw, or heard on the Internet was created by a person? In the age of generative artificial intelligence, this question is no longer paranoid. It is, in fact, fundamental.

We live in a time when algorithms not only organize the content we consume, but also create it. From articles and hyper-realistic images to fake songs by your favorite artists and completely fabricated videos, AI-generated content is radically transforming the web.

The following video was generated by an AI from a single static image.

More than half of the Internet is already the work of AI... and soon 90%?

A study by Amazon Web Services estimates that 57% of online content is already generated or translated by artificial intelligence. And the boldest prediction comes from experts such as Nina Schick and Europol: by 2026, up to 90% of content on the Internet could be synthetic.

In marketing, this phenomenon is already underway. According to Gartner, 30% of outbound marketing messages from large organizations will be generated by AI by 2025. This is not science fiction: it is the reality of an Internet that is changing faster than we can imagine.

Deepfakes: when digital fiction becomes dangerous

Deepfakes are the most visible and disturbing example. Remember the image of Pope Francis wearing a Balenciaga coat? It was completely fake, created with Midjourney. Even more serious was the image of an explosion at the Pentagon, also generated by AI, which caused a brief dip in the stock market before it was debunked.

Even music has been affected. The song “Heart on My Sleeve,” which sounded like an actual collaboration between Drake and The Weeknd, was created with AI. It went viral before being taken down due to copyright issues.

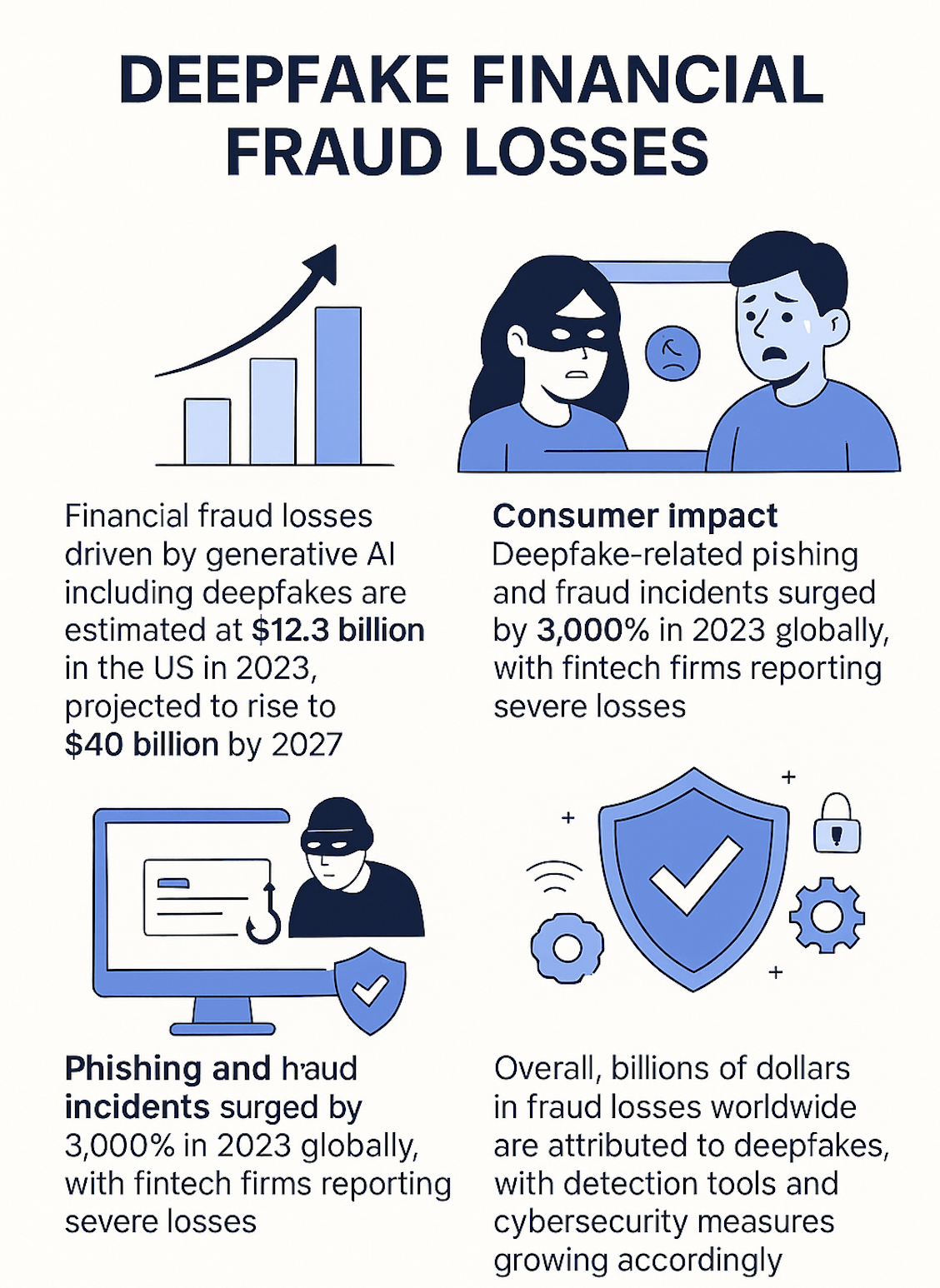

If you pay attention, you can see for yourself, every day and on every platform, how the likenesses of public figures, content creators, and celebrities are used to produce these deepfakes. Scammers rely on them to try to deceive you. Do not fall into the trap: these scams are growing rapidly.

How do you know what is real? Google has an idea

To counteract this tsunami of synthetic content, companies such as Google have developed tools like SynthID, a system of invisible watermarks that makes it possible to identify whether an image or audio clip was generated by AI.

Although promising, this technology will only be useful if it is widely adopted. For now, we live in an era where the artificial and the authentic are dangerously easy to confuse.

Real impacts: privacy, trust, culture

The rise of AI-generated content is not only a technical issue, but also an ethical and social one. Among the main challenges:

- Disinformation: Deepfakes can be used to manipulate elections, create false scandals or erode public trust.

- Digital violence: In the Almendralejo case, teenagers used AI to create fake nude images of female classmates. More than 90% of pornographic deepfakes target women.

- Authorship crisis: Who is the true author of an AI-created work? What happens to the rights of artists whose style has been imitated without consent?

What can we do?

Here are some keys to adapting (and protecting ourselves):

- Develop critical thinking: Not everything you see is real. Learn to detect signs of synthetic content. From now on, for example, when a short video on social media featuring a well-known person you follow looks suspicious, doubt it from the start, as it could be fake.

- Support transparency: Demand that platforms clearly label AI-generated content. In this sense, techniques such as SynthID could be beneficial if they become widespread.

- Protect your privacy: Control what you share on social networks. Anyone could use your image without your consent. In an earlier post, I showed how a few minutes of video and a small audio sample are enough to build a deepfake.

- Use AI to your advantage: It’s not all negative. AI can be an ally to learn, create or automate tasks if used ethically.

- Participate in the debate: Don’t let others decide for you. The laws that regulate this technology should reflect our values as a society.

AI learning from AI: the danger of model collapse

A growing risk is the so-called “model collapse,” which occurs when AI models are trained on content generated by other AIs, rather than learning from real human data. This cycle causes models to lose accuracy and drift further and further away from reality, amplifying errors and biases. Even a small amount of synthetic content can “poison” training, creating a loop of misinformation. If we don’t protect authentic data, we could end up with an artificial intelligence trained to replicate its own failures.
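
The loop described above can be illustrated with a toy simulation. This is only a minimal sketch under strong assumptions, not how real AI models are trained: the "model" here is just a bell curve (a mean and a spread), the function name `simulate_collapse` and its parameters are made up for illustration, and each generation learns only from samples produced by the previous one.

```python
import random
import statistics


def simulate_collapse(generations=200, sample_size=20, seed=0):
    """Toy model-collapse loop (hypothetical helper, for illustration only).

    Each generation fits a mean and a spread to data sampled from the
    previous generation's fit, never seeing the original data again.
    Returns the spread (standard deviation) recorded at every generation.
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # assumed stand-in for "real human data"
    history = [std]
    for _ in range(generations):
        # The new "model" is trained only on the previous model's output.
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        mean = statistics.fmean(samples)
        std = statistics.pstdev(samples)
        history.append(std)
    return history


if __name__ == "__main__":
    spreads = simulate_collapse()
    for generation in (0, 50, 100, 200):
        print(f"generation {generation}: spread = {spreads[generation]:.6f}")
```

In this sketch the spread tends to shrink generation after generation, because every refit loses a little of the original variety and nothing restores it. It is a simplified picture of why a steady supply of authentic data matters.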

We are entering a new stage of the Internet. One where content is not only shared, but also mass-produced with an ease never seen before. In this scenario, the key question is no longer just “who said it?” but “who (or what) created it?”

Are we prepared for a world where almost everything we see online will be the work of an AI? Have you recently come across content that you later discovered was generated by AI? Do you think AI creators should be required by law to label their works? How should people’s privacy be protected in this new digital age? What do you think about the use of AI to create art, music, or literature? Do you find it valid, or do you think it detracts from human creativity?

We still have time to define the rules of the game, but that means being informed, critical, and, above all, responsible in how we act.

Please leave me your comments and reflections. I’m interested in your views on this phenomenon that is changing our screens… and our world!

Have a good week!